Keyword [HGD]

Liao F, Liang M, Dong Y, et al. Defense against adversarial attacks using high-level representation guided denoiser[C]//Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition. 2018: 1778-1787.

1. Overview

1.1. Motivation

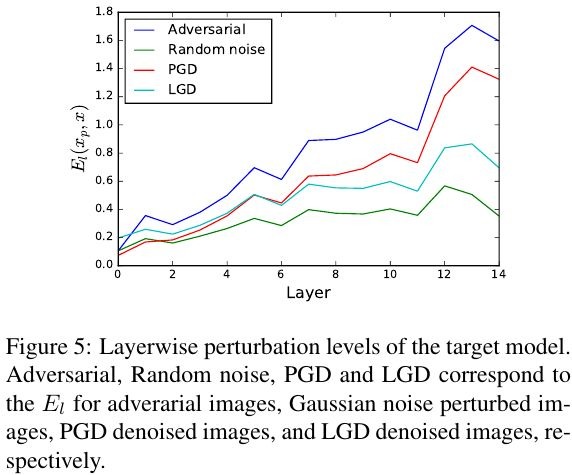

- a small residual perturbation left in the image is amplified to a large magnitude in the top layers of the model (the error amplification effect), which misleads the classifier

This paper proposes a high-level representation guided denoiser (HGD) as a defense for image classification. HGD is:

- more robust to both white-box and black-box attacks

- trained on a small subset of images, yet generalizes well to other images and to unseen classes

- transferable: it can defend models other than the one that guided its training

1.2. Related Works

1.2.1. Attack Methods

- box-constrained L-BFGS

- FGSM (fast gradient sign method)

- IFGSM (iterative FGSM)
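To make the two gradient-based attacks concrete, here is a minimal plain-Python sketch against a toy logistic-regression "classifier". The weights, the [0, 1] pixel range, and the helper names (`fgsm`, `ifgsm`, `input_grad`) are illustrative assumptions, not code from the paper. FGSM takes one step of size `eps` along the sign of the input gradient of the loss; IFGSM takes several smaller steps of size `alpha` and projects back into the L-inf ball of radius `eps` after each one.

```python
import math

# Toy "classifier" (an assumption for illustration, not the paper's model):
# logistic regression with score s(x) = w . x + b and p(y=1 | x) = sigmoid(s(x)).

def score(x, w, b):
    return sum(wi * xi for wi, xi in zip(w, x)) + b

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def input_grad(x, y, w, b):
    """Gradient of the binary cross-entropy loss w.r.t. the input x."""
    p = sigmoid(score(x, w, b))
    # d/dx [-y log p - (1 - y) log(1 - p)] = (p - y) * w
    return [(p - y) * wi for wi in w]

def sign(v):
    return (v > 0) - (v < 0)

def clip(v, lo, hi):
    return max(lo, min(hi, v))

def fgsm(x, y, w, b, eps):
    """Single step of size eps along sign(grad); pixels kept in [0, 1]."""
    g = input_grad(x, y, w, b)
    return [clip(xi + eps * sign(gi), 0.0, 1.0) for xi, gi in zip(x, g)]

def ifgsm(x, y, w, b, eps, alpha, steps):
    """Several steps of size alpha, each projected back into the eps-ball around x."""
    x_adv = list(x)
    for _ in range(steps):
        g = input_grad(x_adv, y, w, b)
        x_adv = [
            clip(clip(xa + alpha * sign(gi), xi - eps, xi + eps), 0.0, 1.0)
            for xa, gi, xi in zip(x_adv, g, x)
        ]
    return x_adv

w, b = [2.0, -1.0, 0.5], 0.0
x, y = [0.6, 0.2, 0.5], 1
x_fgsm = fgsm(x, y, w, b, eps=0.1)
x_ifgsm = ifgsm(x, y, w, b, eps=0.1, alpha=0.03, steps=5)
print(score(x, w, b), score(x_fgsm, w, b))  # the attack lowers the class-1 score
```

For a linear model the gradient sign never changes, so IFGSM lands on the same point as FGSM once `alpha * steps >= eps`; the iterative version only pays off on non-linear networks, where the gradient direction changes along the path.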

1.2.2. Defense Methods

- data augmentation with adversarial examples (adversarial training): time-consuming; on some datasets it even improves accuracy on clean images, but this has not been observed on ImageNet

- preprocessing the input: denoising auto-encoder, median filter, averaging filter, Gaussian low-pass filter, JPEG compression

- two-step defense model: first detect adversarial inputs, then reform them based on the difference between the manifolds of clean and adversarial examples

- defenses relying on the gradient masking effect: deep contractive network, knowledge distillation, saturating networks
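As an example of the simple preprocessing defenses listed above, here is a minimal median-filter sketch in plain Python. The 3x3 window, the edge-replication border handling, and the name `median_filter` are my own choices for illustration. The filter replaces each pixel with the median of its neighbourhood, so an isolated outlier disappears while flat regions are untouched.

```python
from statistics import median

def median_filter(img, k=3):
    """k x k median filter on a 2-D list of numbers; borders use edge replication."""
    h, w = len(img), len(img[0])
    r = k // 2
    out = []
    for i in range(h):
        row = []
        for j in range(w):
            # Collect the k*k neighbourhood, clamping indices at the image border.
            window = [
                img[min(max(i + di, 0), h - 1)][min(max(j + dj, 0), w - 1)]
                for di in range(-r, r + 1)
                for dj in range(-r, r + 1)
            ]
            row.append(median(window))
        out.append(row)
    return out

# A flat grey image with one isolated spike in the middle.
img = [[0.5] * 5 for _ in range(5)]
img[2][2] = 0.9
cleaned = median_filter(img)
```

The single-spike case is deliberately easy; the sketch only shows the mechanics. The filter treats every pixel change the same way, regardless of whether that change matters to the classifier.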

2. Methods

2.1. Pixel Guided Denoiser (PGD)

2.2. High-level Representation Guided Denoiser (HGD)

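- feature guided denoiser (FGD): guided by layer l = -2 (the topmost convolutional layer); unsupervised (no labels needed)

- logits guided denoiser (LGD): guided by layer l = -1 (the logits, before softmax); unsupervised (no labels needed)

- class label guided denoiser (CGD): guided by the classification loss with the true label; supervised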
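PGD and the three HGD variants differ only in which quantity the training loss compares between the denoised image `x_hat` and the clean image `x`. The sketch below writes the four losses in plain Python against a hypothetical two-layer target model (`W1`, `W2`, `features`, `logits` are made-up stand-ins for the frozen CNN: `features` plays layer -2, `logits` plays layer -1). I use the L1 distance for PGD, FGD and LGD, which is how I remember the paper, but treat the choice of norm as an assumption; CGD uses cross-entropy with the true label `y`.

```python
import math

# Hypothetical frozen target model: x -> features (layer l = -2) -> logits (layer l = -1).
W1 = [[1.0, -0.5, 0.2], [0.3, 0.8, -0.4]]  # 3 "pixels" -> 2 features
W2 = [[1.5, -1.0], [-0.7, 1.2]]            # 2 features -> 2 class logits

def matvec(W, v):
    return [sum(wij * vj for wij, vj in zip(row, v)) for row in W]

def features(x):  # stand-in for f_{-2}: topmost hidden representation (ReLU)
    return [max(0.0, h) for h in matvec(W1, x)]

def logits(x):    # stand-in for f_{-1}: pre-softmax outputs
    return matvec(W2, features(x))

def l1(a, b):     # L1 distance (norm choice is an assumption)
    return sum(abs(ai - bi) for ai, bi in zip(a, b))

def pgd_loss(x_hat, x):   # pixel guided: compare images directly
    return l1(x_hat, x)

def fgd_loss(x_hat, x):   # feature guided: compare layer -2, no label needed
    return l1(features(x_hat), features(x))

def lgd_loss(x_hat, x):   # logits guided: compare layer -1, no label needed
    return l1(logits(x_hat), logits(x))

def cgd_loss(x_hat, y):   # class-label guided: cross-entropy with the true label y
    z = logits(x_hat)
    m = max(z)
    log_sum_exp = m + math.log(sum(math.exp(zi - m) for zi in z))
    return log_sum_exp - z[y]

x = [0.5, 0.2, 0.1]
null_dir = [0.04, 0.46, 0.95]  # W1 @ null_dir == 0 (up to rounding)
x_hat = [xi + 0.1 * di for xi, di in zip(x, null_dir)]
print(pgd_loss(x_hat, x), lgd_loss(x_hat, x))  # PGD > 0, LGD ~ 0
```

The example residual lies in the null space of `W1`, so this toy target model cannot "see" it: PGD still pays for it, while FGD and LGD do not. It is a toy illustration of why a pixel-level loss and the classifier's behaviour need not agree.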

3. Experiments

3.1. PGD

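- with a denoising auto-encoder (DAE) as the denoiser, accuracy on clean images drops significantly

- PGD's denoising loss and its classification accuracy are poorly correlated: a lower pixel-level denoising loss does not necessarily mean higher accuracy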

Analyze the layer-wise perturbations of the target model's activations caused by PGD-denoised images:

At the final layer, the perturbation left by LGD is much smaller than those left by PGD and by the adversarial examples, and close to that caused by random noise.
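One way to quantify this layer-wise comparison is the relative perturbation at each layer l, ||f_l(x') - f_l(x)|| / ||f_l(x)||, where x is the clean image and x' is its adversarial, PGD-denoised or LGD-denoised version. The sketch below computes it for a toy ReLU network given as a list of weight matrices; the L2 norm, the toy network, and the function names are assumptions for illustration, not the paper's exact setup.

```python
import math

def matvec(W, v):
    return [sum(w * a for w, a in zip(row, v)) for row in W]

def forward_all(x, layers):
    """Activations after every layer: ReLU on hidden layers, raw logits at the end."""
    acts, h = [], x
    for i, W in enumerate(layers):
        h = matvec(W, h)
        if i < len(layers) - 1:
            h = [max(0.0, a) for a in h]
        acts.append(h)
    return acts

def l2(v):
    return math.sqrt(sum(a * a for a in v))

def layerwise_perturbation(x_clean, x_other, layers):
    """||f_l(x_other) - f_l(x_clean)|| / ||f_l(x_clean)|| for every layer l."""
    ref, other = forward_all(x_clean, layers), forward_all(x_other, layers)
    return [
        l2([a - r for a, r in zip(fa, fr)]) / l2(fr)
        for fa, fr in zip(other, ref)
    ]

# Toy net whose layers have a larger gain (3) along the direction the residual
# noise lies in than along the clean signal (gain 1).
layers = [[[1.0, 0.0], [0.0, 3.0]]] * 3
ratios = layerwise_perturbation([1.0, 0.0], [1.0, 0.01], layers)
print(ratios)  # grows layer by layer, roughly 0.03, 0.09, 0.27
```

In this toy, a residual that is 1% of the input becomes about 27% of the logits after three layers, a small-scale version of the error amplification effect from the Motivation.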

3.2. HGD

- HGD is more robust to both white-box and black-box attacks than PGD and ensV3 (ensemble adversarially trained Inception-v3)

- the differences among the HGD variants (FGD, LGD, CGD) are insignificant

- learning only to denoise is much easier than learning the coupled task of classification and defense

3.3. HGD as an Anti-adversarial Transformer

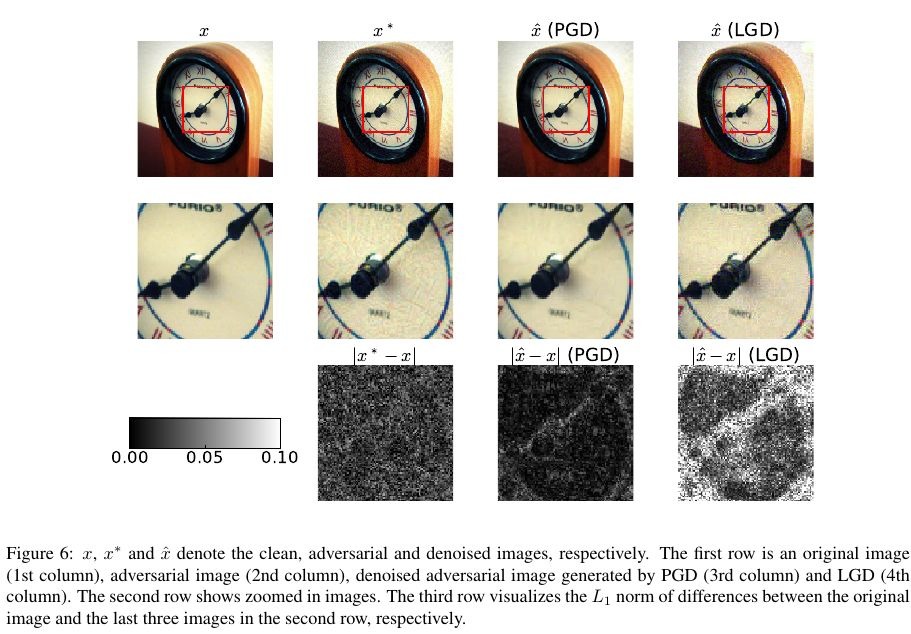

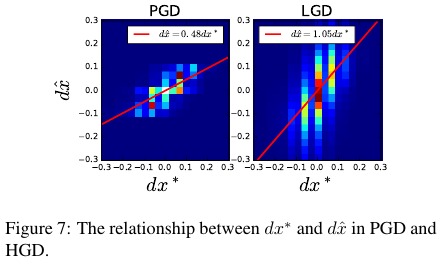

- LGD does not suppress the total pixel-level noise as PGD does; instead it adds more perturbation to the image

- superscript *: adversarial perturbation

- hat ^: predicted perturbation

- the slope of PGD’s fitted line is < 1: PGD removes only part of the adversarial noise

- the slope of LGD’s fitted line is > 1: the predicted perturbation is larger than the adversarial one and the estimate is very noisy, which leads to high pixel-level noise
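The slope discussed above can be estimated with a least-squares fit of the predicted perturbation against the adversarial one. The sketch below assumes dx* = x* - x (adversarial perturbation) and dx̂ = x* - x̂ (the noise the denoiser removes), and fits a line through the origin; the paper may define or fit its line differently, so treat both choices, and the name `perturbation_slope`, as assumptions.

```python
def perturbation_slope(x_clean, x_adv, x_denoised):
    """Least-squares slope k of dx_hat ~ k * dx_star, fitted through the origin.

    Assumed definitions: dx_star = x_adv - x_clean, dx_hat = x_adv - x_denoised.
    """
    dx_star = [a - c for a, c in zip(x_adv, x_clean)]    # adversarial perturbation
    dx_hat = [a - d for a, d in zip(x_adv, x_denoised)]  # perturbation removed by the denoiser
    num = sum(s * h for s, h in zip(dx_star, dx_hat))
    den = sum(s * s for s in dx_star)
    if den == 0:
        raise ValueError("x_adv equals x_clean; slope undefined")
    return num / den

x_clean = [0.5, 0.5, 0.5, 0.5]
x_adv = [0.54, 0.46, 0.54, 0.46]
k_under = perturbation_slope(x_clean, x_adv, [0.52, 0.48, 0.52, 0.48])  # removes half: k ~ 0.5
k_over = perturbation_slope(x_clean, x_adv, [0.46, 0.54, 0.46, 0.54])   # overshoots: k ~ 2.0
```

A slope below 1 corresponds to the PGD-like case (only part of the noise is removed); a slope above 1 corresponds to the LGD-like case (the estimated perturbation overshoots the adversarial one).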